Sparse’s Phone Orchestra Creates Symphonies Among Strangers

David Cihelna and Gene Han are working to turn an ocean of networked mobile devices into a sea of tranquility. Partnering at the NYU Tisch School of the Arts’ Interactive Telecommunications Program (ITP), the two developers conceived Sparse, a “phone orchestra” that uses smartphones as a collective speaker system.

Walking into a dimly lit space, participants point their phones' browsers to a predetermined URL, which serves each of them a single piece of a multi-part audio stream. Then, bathed in a calming blue gradient that glows brighter with every new screen, each person adds to the collaborative amplifier, reshaping the sonic relationships in the room as they circulate.

After premiering at the Tribeca Film Festival Interactive Playground 2015 and being deployed again at Houston's Day for Night Festival 2015, Sparse has used field recordings of the New York City Subway and custom compositions to bathe audiences in symphonic cooperation. Every new person who enters the room, or shifts position, reveals melody and dimension as the sounds from their phone speaker integrate and collide with others. With nothing to look at but each other, the congregation is planted firmly in the moment.

Cihelna and Han see coding as the next frontier for music, a way to remake the idea of the “instrument” and rethink the role of the smartphone as a unifier rather than an isolator. Equally a fan of Major Lazer, trap music, string quartets and cinematic surround sound, Cihelna believes there is potential for Sparse at art and dance music festivals alike, offering up both an oasis from and augmentation of headline performances. We spoke to Cihelna about inspirations, optimizing performance nodes, and adapting Sparse for a high-volume (in both the device and decibel sense) environment.

When you set out to stage a collective performance, were you inspired by multidirectional efforts from the past—the Flaming Lips’ 1996 Parking Lot Experiments, quadrophonic recordings, an art installation, or anything else site-specific—or is your focus purely contemporary?

As we were showing Sparse, I definitely heard about the Flaming Lips’ thing [where the band gathered dozens of people and their cars in a parking garage and had each of them simultaneously play a uniquely prepared tape]. But when I created this with my partner, Gene Han, we were thinking of how to make something isolating, like a phone, more social and able to create connection between people.

We were coming from more of a tech perspective—thinking about how, when you put 20 people in a room together, almost everyone will have a smartphone. But the connection is nonexistent or surreal—a little dot that knows someone else is there. So we wanted to create a physical manifestation that acknowledges the fact that everyone has a smartphone and is theoretically connected in real space.

Historically, we were thinking more of things like interactive cinema and music. My background is in music, and the idea that I or other musicians could use their audiences’ cellphones to redistribute music in a space is exciting to me. I guess we really thought of it more from a user perspective, a tech sense, and an experiential sense.

I would say the one thing that factored in from a music perspective was when James Murphy did this huge sound setup with multiple speakers at Sónar in Barcelona. It was inspiring to encounter people in a space, listening and maybe even dancing—but instead of being tethered to a directional approach, you could move around and feel a speaker in any direction.

And with Sparse, the primary goal is similarly to break the tyranny of the artist–audience dynamic?

Exactly. I think it's really about the feeling within a space. The reason we called it a "spatial performance" is because the "performer," whether prerecorded or live through our system, happens next to you, in front of you or behind you, rather than coming from a single sound source at the front. It's breaking down a dichotomy between where the performance happens traditionally and where it could happen in the future.

What we’ve seen is people bunching their phones with friends and strangers in the crowd, putting their heads together to get different combinations of the same song. Essentially, there is nothing else for you to do on your phone once you join, other than be in the space and listen. It’s supposed to be a very sparse experience—hence the name—but it’s also an experience that deepens the connection between people using devices that typically drive a wedge between direct interactions.

To launch Sparse, did you search for a specific space or customize one?

There are really two factors: the type of space and the number of people in it. One of the restrictions we deal with is that the smartphones people carry don't get very loud. So there is a fine line between finding a space small and quiet enough to really grow the sound when people are in there, and having a room big enough for enough people that the sheer number of phones raises the amplitude.

We initially did one in a very, very small, dark room with maybe 25–30 people. Then we did it in larger areas with lots of background noise, and that was interesting, too. It's really about finding the right number of people. It gets better and better the more people are involved, because of sheer sonic quantity. If we split a piece of music into five stems and broadcast them across 20 people, that sounds great. But if we split it into 50 pieces and broadcast to 100 people, it's amazing. That's the experience we're going for.
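As a rough check on why "sheer sonic quantity" matters, a standard acoustics approximation for N sources of equal level whose signals are uncorrelated (as separate stems largely are) is that their powers add. This assumes every phone reaches the listener at about the same level, which a real room with people moving around will only approximate:

$$
L_N = L_1 + 10\log_{10} N \ \text{dB}
$$

$$
N = 20:\ 10\log_{10} 20 \approx 13\ \text{dB}, \qquad N = 100:\ 10\log_{10} 100 = 20\ \text{dB}
$$

So each doubling of the crowd adds roughly 3 dB over a single phone, which is why a larger group can lift quiet phone speakers above the room's background noise.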

Is the piece each person gets completely random?

Kind of, but we highlight and prefer certain parts. We know that in the frequency spectrum, bass sounds (below 300 Hz) don't work well on phone speakers. So we shift them up a little, EQ the sound, and do some post-processing. And if we want to augment certain parts, more phones get those hard-to-hear, key sounds. In a sense, we're doing our own mix. In a song, you'd mix the vocals up. But instead of increasing the volume of the voice, we're giving more phones the voice so it gets stronger. What seems like a small element on its own can make a huge impact when it's rendered with other bundles of the score.
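One way to read "giving more phones the voice" is as a weighted random draw: each phone that connects still gets a stem at random, but key parts are more likely to be picked. The sketch below assumes that interpretation. The stem names, weights, and function names are hypothetical, and Sparse's actual assignment logic is not described in the interview.

```python
import random
from collections import Counter

# Hypothetical stems and weights for illustration only. A weight of 3.0 means
# a phone is three times as likely to get that stem as a stem weighted 1.0.
STEM_WEIGHTS = {
    "vocal": 3.0,  # key part that is hard to hear on one phone: boosted
    "lead": 2.0,
    "pads": 1.0,
    "hats": 1.0,
}


def assign_stem(weights: dict[str, float], rng: random.Random) -> str:
    """Pick a stem for one newly connected phone, biased by its weight."""
    stems = list(weights)
    return rng.choices(stems, weights=[weights[s] for s in stems], k=1)[0]


def simulate_room(n_phones: int, seed: int = 0) -> Counter:
    """Assign stems to n_phones and count how many phones play each one."""
    rng = random.Random(seed)
    return Counter(assign_stem(STEM_WEIGHTS, rng) for _ in range(n_phones))


if __name__ == "__main__":
    for stem, n in simulate_room(100).most_common():
        print(f"{stem:>5}: {n} phones")
```

With these weights, about 3 in 7 phones play the vocal. A deterministic alternative (always giving the next phone whichever stem is furthest below its target share) would avoid unlucky streaks in a small room, at the cost of the "kind of random" feel.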

What do you stand up on the backend to establish the mix?

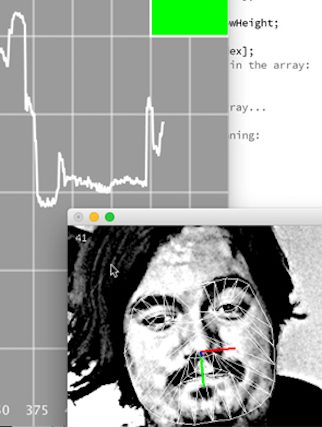

A server element listens for anyone who connects to our specially configured URL and, once they connect on the client side, continually sends them a clock: 1, 2, 3, 4. The server also decides which stem each person gets, and it syncs everyone together.
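A minimal sketch of such a shared clock, assuming the server fixes a start time and tempo, and each phone estimates its offset from the server's clock with Cristian's algorithm (one request/response round trip, assuming symmetric network delay). The names, the 120 BPM default, and the 4/4 bar are illustrative assumptions, not details of Sparse's backend.

```python
import math
import time
from dataclasses import dataclass


def estimate_offset(t_send: float, server_time: float, t_recv: float) -> float:
    """Cristian's algorithm: estimated server clock minus local clock.

    t_send and t_recv are local timestamps taken around one request;
    server_time is the timestamp the server put in its reply.
    Assumes the network delay is the same in both directions.
    """
    return server_time - (t_send + t_recv) / 2


@dataclass(frozen=True)
class BeatClock:
    """Hypothetical shared clock: every phone derives the same count from it."""

    start: float  # server time (seconds) of beat 1 in bar 1
    bpm: float = 120.0
    beats_per_bar: int = 4

    @property
    def beat_seconds(self) -> float:
        return 60.0 / self.bpm

    def position(self, server_now: float) -> tuple[int, int, float]:
        """Return (bar, beat, seconds until the next beat), counting from 1."""
        if server_now < self.start:
            raise ValueError("clock has not started yet")
        beats_elapsed = math.floor((server_now - self.start) / self.beat_seconds)
        bar = beats_elapsed // self.beats_per_bar + 1
        beat = beats_elapsed % self.beats_per_bar + 1
        next_beat = self.start + (beats_elapsed + 1) * self.beat_seconds
        return bar, beat, next_beat - server_now


if __name__ == "__main__":
    clock = BeatClock(start=time.time())
    offset = 0.0  # a phone would use estimate_offset(...) against the server
    print(clock.position(time.time() + offset))
```

Because every phone computes the beat from the same start time rather than counting received messages, a phone that joins late lands on the same 1, 2, 3, 4 as everyone already in the room.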

The Web Audio API [application programming interface] allows us to do things with a remote server that we couldn't do before, providing us with all these modular routing options, effects, and visualization possibilities. It's responsible for a slew of experimental content on the web.

What goes into producing and treating the actual stems for a Sparse composition?

For Sparse, I compose the sounds in Cubase, Ableton Live, and also with Native Instruments’ Maschine. As I was working on it, I noticed two modes that worked the best. The first is having some sort of beat element, because you start to get these complex, polyrhythmic connections between different devices. If you split a very complicated drum loop into separate parts and attempt to recombine them, they don’t fall back to the same grid, and things that made sense become something quite different—especially in the snares and hi-hats and toms.

The second mode is splitting arrangements. When we were prototyping, I composed something for a string quartet, and I split each separate string part. We played it back with a group of 45 people, and that’s interesting, because the dynamics of the performance are much closer to being in front of a string quartet in a real space than hearing it on headphones or speakers. However, the focal point still ebbs and flows around the room, creating this unique ocean of sound.

Is it more satisfying to see the parts come together or fall apart?

I think it’s a combination. What we’ve done with the pieces we showed up until now was something that started in a composed, predictable manner, like splitting chords into their respective elements and distributing those, and then making it fall apart and become something you have to listen to multiple times to get. So, I definitely do nudge it, and at some point we break it down and say, we got it, let’s go on to something new.

What has to be remembered is it’s an installation, and people may come in for two to three minutes, so if the music in a space keeps repeating, what keeps them there if they can’t play with the installation? It’s important to keep people on their toes, so I tend to not have the same pattern repeat for more than 20–30 seconds; then you break it down, add something more complicated. For one of the pieces we were playing, it changed every 25 seconds; by the fourth time, it was chaotic and a mess, distorted and strange, and it got people into a different mindset.

The experience is constantly changing, offering something as unified as it is personalized.

Sparse performances have involved prerecorded tracks, but could you integrate a producer or DJ using a clip-based production tool like Ableton Live to trigger stems and provide the master clock?

That’s exactly what we want to deal with, actually. If there’s already some kind of MIDI transfer system we could use in the performance, it makes it so much easier. We could easily connect something in Ableton to a web server and get it to do what we wanted it to do, versus getting a stream of mic’d instruments and passing them through our system.
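Ableton Live can send standard MIDI clock, so a bridge like the one Cihelna describes might start by turning MIDI real-time messages into the same 1, 2, 3, 4 count that the Sparse server broadcasts. The status bytes and the rate of 24 clock pulses per quarter note come from the MIDI 1.0 specification. The `MidiClockCounter` class, and how its output would reach the web server, are hypothetical.

```python
from typing import Optional

# MIDI 1.0 system real-time status bytes.
TIMING_CLOCK = 0xF8  # sent 24 times per quarter note
START = 0xFA
CONTINUE = 0xFB
STOP = 0xFC
PULSES_PER_QUARTER = 24


class MidiClockCounter:
    """Hypothetical helper: turns MIDI clock bytes into 1..N beat numbers."""

    def __init__(self, beats_per_bar: int = 4) -> None:
        self.beats_per_bar = beats_per_bar
        self.pulses = 0
        self.running = False

    def feed(self, status: int) -> Optional[int]:
        """Process one real-time byte.

        Returns the beat number (1..beats_per_bar) when a clock pulse starts
        a new quarter note, otherwise None.
        """
        if status == START:  # restart from the top of the song
            self.pulses = 0
            self.running = True
            return None
        if status == CONTINUE:  # resume from the current position
            self.running = True
            return None
        if status == STOP:
            self.running = False
            return None
        if status != TIMING_CLOCK or not self.running:
            return None
        beat_index = self.pulses // PULSES_PER_QUARTER
        starts_beat = self.pulses % PULSES_PER_QUARTER == 0
        self.pulses += 1
        return beat_index % self.beats_per_bar + 1 if starts_beat else None


if __name__ == "__main__":
    counter = MidiClockCounter()
    counter.feed(START)
    for _ in range(96):  # one bar of 4/4 at 24 pulses per quarter note
        beat = counter.feed(TIMING_CLOCK)
        if beat is not None:
            print(beat)  # the count the server would relay to every phone
```

Letting the producer's clock drive the count keeps the phones locked to whatever tempo the artist is playing, rather than to a fixed tempo chosen in advance.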

It would be amazing to partner with an artist [and] curate a venue where songs could be remade and adapted live.

What would allow you to configure Sparse for more high-energy, signal-saturated environments?

One of the major problems we face technologically is that older phones tend to not be great with this. That’s something we are continuously improving in the code itself.

Finding the right spot, and the best time, at any event is crucial for the performance and the participants. When we set up at the Day for Night Festival, the farther we were from the major stages, the less sound pollution we had to deal with. The more insulation a room provides, the better. Also, the later it gets in the evening, the louder the music, so the less likely Sparse is to work.

It would be an interesting challenge to see how this could be integrated into a more continuous setup around a festival. Maybe you could take it out any time and start your own small sound groups—that’s something to consider.